
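Personal Information

Associate Professor

Doctoral Supervisor

Master's Supervisor

Gender: Male

Alma Mater: Ritsumeikan University

Degree: Ph.D.

Affiliation: School of Software; International School of Information and Software

Discipline: Software Engineering

Office: Room 323A, Information Building, Development Zone Campus, Dalian University of Technology

Phone: 0411-62274393

Email: xurui@dlut.edu.cn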

-

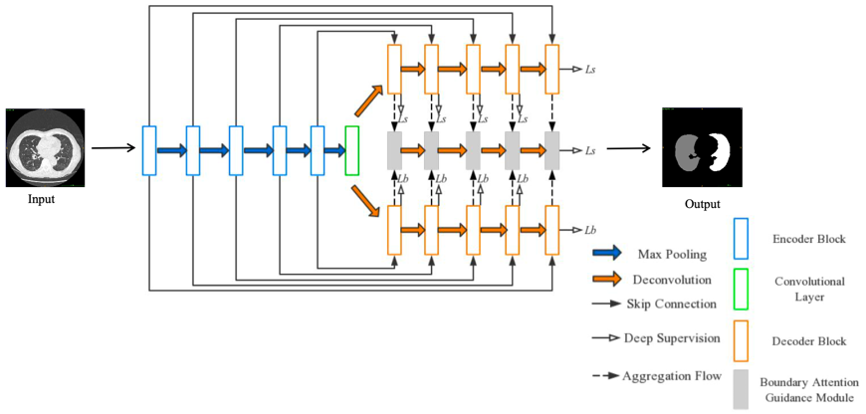

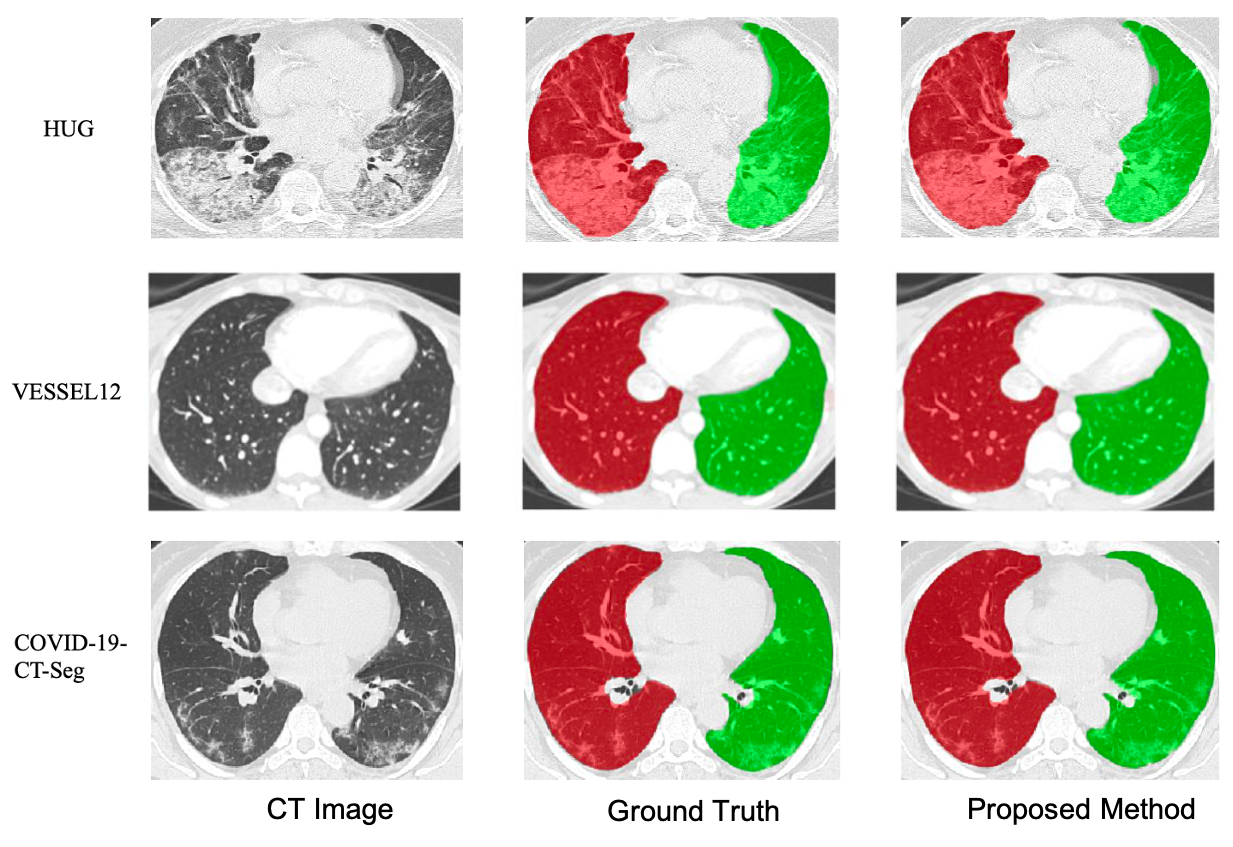

Lung segmentation on CT images is a crucial step in a computer-aided diagnosis system for lung diseases. Existing deep-learning-based lung segmentation methods are less effective at segmenting lungs on clinical CT images; in particular, the segmentation of lung boundaries is not accurate enough because of the complex pulmonary opacities encountered in clinical practice. In this paper, we propose a boundary-guided network (BG-Net) to address this problem. It contains two auxiliary branches that separately segment the lungs and extract the lung boundaries, and an aggregation branch that exploits the lung boundary cues to guide the network toward more accurate lung segmentation on clinical CT images. We evaluate the proposed method on a private dataset collected from Osaka University Hospital and on four public datasets: StructSeg, HUG, VESSEL12, and a Novel Coronavirus 2019 (COVID-19) dataset. Experimental results show that the proposed method segments lungs more accurately and outperforms several other deep-learning-based methods.
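The abstract does not say how ground truth for the boundary branch is produced. A minimal sketch, assuming boundary labels are derived from an existing binary lung mask as the mask minus its morphological erosion (a common choice, not confirmed by the source); the helper names `erode4` and `boundary_target` are hypothetical:

```python
import numpy as np


def erode4(mask: np.ndarray) -> np.ndarray:
    """Binary erosion with a 4-connected (cross-shaped) structuring element.

    A pixel survives only if it and its up/down/left/right neighbours are
    all foreground; pixels outside the image count as background.
    """
    m = np.pad(mask.astype(bool), 1, constant_values=False)
    center = m[1:-1, 1:-1]
    up, down = m[:-2, 1:-1], m[2:, 1:-1]
    left, right = m[1:-1, :-2], m[1:-1, 2:]
    return center & up & down & left & right


def boundary_target(mask: np.ndarray) -> np.ndarray:
    """Hypothetical boundary label: foreground pixels removed by one erosion."""
    m = mask.astype(bool)
    return m & ~erode4(m)
```

For a filled 5×5 square, this returns the 16-pixel outer ring of the square. Eroding more than once would give a thicker boundary band, trading localization precision for more positive boundary pixels during training.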

[1] Rui Xu, Yi Wang, Tiantian Liu, Xinchen Ye*, Lin Lin, Yen-Wei Chen, Shoji Kido, Noriyuki Tomiyama, BG-Net: Boundary-Guided Network for Lung Segmentation on Clinical CT Images, International Conference on Pattern Recognition (ICPR), Milan, Italy, January 10-15, 2021. (CCF-C)

[2] Rui Xu, Jiao Pan, Xinchen Ye, Yasushi Hirano, Shoji Kido, Satoshi Tanaka, A Pilot Study to Utilize a Deep Convolutional Network to Segment Lungs with Complex Opacities, 2017 Chinese Automation Congress (CAC), pp. 3291-3295, 2017.

-

Precise classification of pulmonary textures is crucial for developing a computer-aided diagnosis (CAD) system for diffuse lung diseases (DLDs). Although deep learning techniques have been applied to this task, the classification performance does not yet meet clinical requirements, because commonly used deep networks built by stacking convolutional blocks cannot learn feature representations discriminative enough to distinguish complex pulmonary textures. To address this problem, we design a multi-scale attention network (MSAN) composed of several stacked residual attention modules followed by a multi-scale fusion module. Our deep network not only exploits information at different scales but also automatically selects optimal features for a more discriminative representation. In addition, we develop visualization techniques that make the proposed deep model transparent to humans. The proposed method is evaluated on a large dataset. Experimental results show that it achieves an average classification accuracy of 94.78% and an average F-value of 0.9475 in classifying 7 categories of pulmonary textures, and the visualization results intuitively explain the working behavior of the deep network. The proposed method achieves state-of-the-art performance in classifying pulmonary textures on high-resolution CT images.
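The abstract does not give MSAN's layer definitions. As an illustration only, the NumPy sketch below assumes the (1 + M) ⊙ F form of residual attention, where a sigmoid mask M re-weights features F, and a fusion step that global-average-pools feature maps from several depths and concatenates them; all function names are hypothetical:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def residual_attention(features, mask_logits):
    """Residual attention: H = (1 + M) * F, with M = sigmoid(mask_logits) in (0, 1).

    The identity term keeps the original features, so a near-zero mask cannot
    erase them; the mask can only amplify informative positions (up to 2x).
    """
    return (1.0 + sigmoid(mask_logits)) * features


def multi_scale_fusion(feature_maps):
    """Global-average-pool each (C_i, H_i, W_i) map, then concatenate.

    Maps from different depths have different spatial sizes; pooling removes
    the spatial axes so they can be joined into one descriptor of length sum(C_i).
    """
    return np.concatenate([f.mean(axis=(1, 2)) for f in feature_maps])
```

The residual form is one reason stacking many attention modules stays trainable: even with an uninformative mask, each module passes its input features through unchanged.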

[1] Rui Xu, Zhen Cong, Xinchen Ye*, Yasushi Hirano, Shoji Kido, Tomoko Gyobu, Yutaka Kawata, Osamu Honda, Noriyuki Tomiyama, Pulmonary Textures Classification via a Multi-Scale Attention Network, IEEE Journal of Biomedical and Health Informatics, 24(7): 2041-2052, 2020. (CAS Zone 1 Top)

[2] Rui Xu, Zhen Cong, Xinchen Ye*, Yasushi Hirano, Shoji Kido, Pulmonary Textures Classification Using a Deep Neural Network with Appearance and Geometry Cues, IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2018), Calgary, Alberta, Canada, April 15-20, 2018. (CCF-B)

-

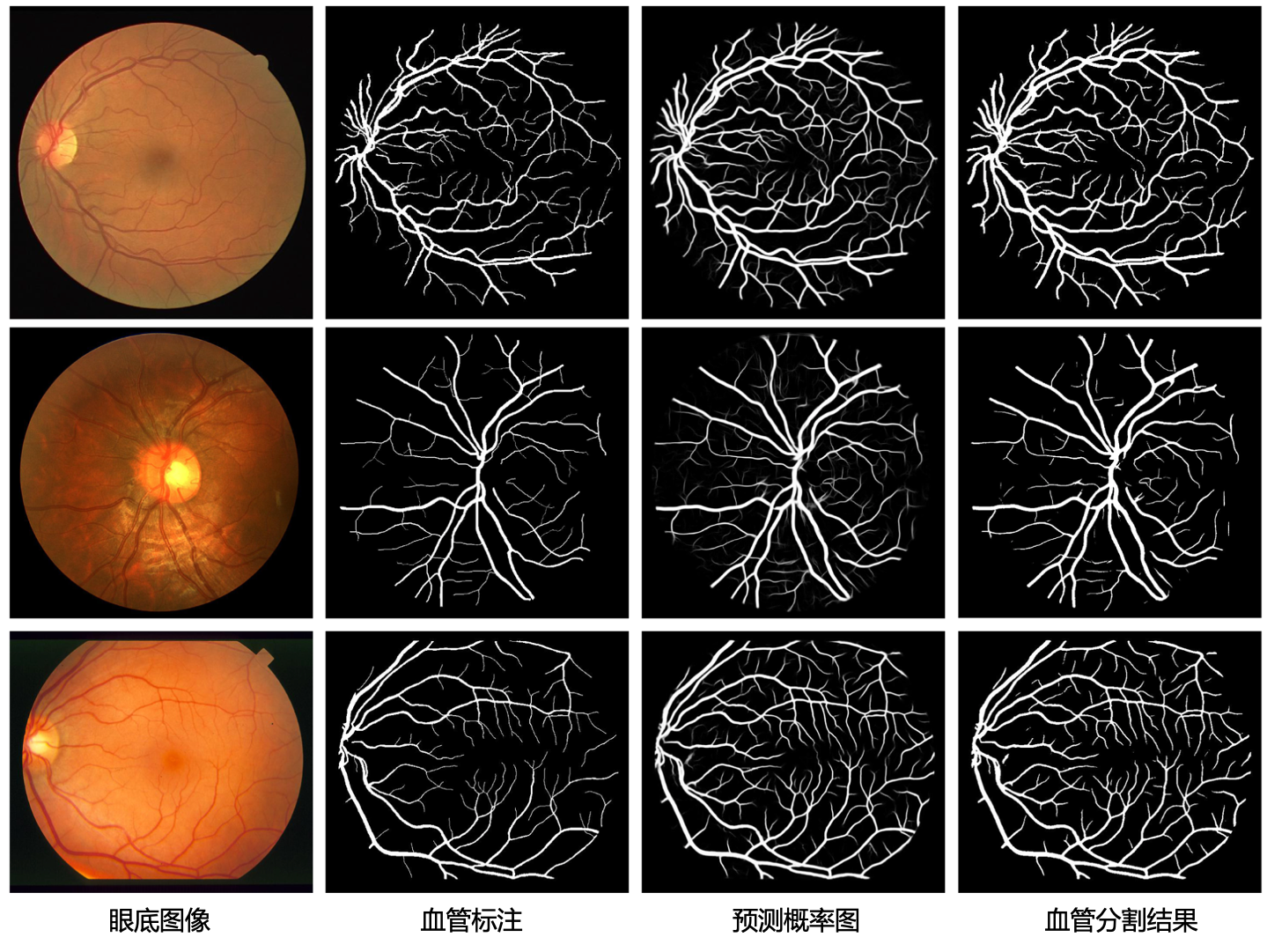

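This research aims to achieve high-precision segmentation of retinal vessels in fundus images. Retinal vessels are associated with many diseases: glaucoma, diabetes, hypertension, and others all alter the morphology and structure of the retinal vasculature. Automatically analyzing retinal vessels and building computer-aided diagnosis systems for fundus images can help physicians diagnose these diseases efficiently and accurately, and a core requirement is high-precision vessel segmentation of fundus images. Our group previously addressed this problem with two deep convolutional neural network methods, a multi-scale deep supervision network (PCM 2018, CCF-C) [1] and a semantics and multi-scale aggregation network (ICASSP 2020, CCF-B) [2], both of which improved the accuracy of retinal vessel segmentation. However, the segmented vessel trees still contain many breakpoints, which hamper subsequent analysis of vessel morphology and structure and undermine the robustness and reliability of computer-aided diagnosis systems for fundus images. Breakpoints in the vessel tree are common across retinal vessel segmentation methods based on deep convolutional networks, yet the problem has received little attention from researchers worldwide, and little prior work exists. Our group therefore studied this problem and proposed a recursive semantics-guided network (MICCAI'20, a top international conference on medical image computing) [3], which reduces breakpoints in retinal vessel segmentation and improves the connectivity of the segmented vessel tree.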
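One simple, hypothetical way to quantify breakpoints is to count how many disconnected pieces a predicted binary vessel mask falls apart into: each break in a vessel tends to add a component. This is only an illustrative proxy, not the INF/COR connectivity metrics the group reports, whose definitions are not given here. The function name `count_components` is hypothetical:

```python
from collections import deque

import numpy as np


def count_components(mask: np.ndarray) -> int:
    """Count 8-connected foreground components in a 2-D binary mask via BFS."""
    m = mask.astype(bool)
    seen = np.zeros_like(m)
    h, w = m.shape
    count = 0
    for r in range(h):
        for c in range(w):
            if m[r, c] and not seen[r, c]:
                # Unvisited foreground pixel: start a new component and flood it.
                count += 1
                seen[r, c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if 0 <= ny < h and 0 <= nx < w and m[ny, nx] and not seen[ny, nx]:
                                seen[ny, nx] = True
                                queue.append((ny, nx))
    return count
```

Removing a single pixel from the middle of a one-pixel-wide vessel raises the count from 1 to 2, even though pixel-wise accuracy barely changes. This is why connectivity needs to be measured separately from AUC, SE, and SP.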

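Using a three-level U-Net as the base model, our group proposed a semantics-guided module that uses the rich semantic information in the deep layers to guide network learning and extract more representative vessel features. This counters the adverse effects of varying illumination during image acquisition and of fundus lesions on vessel extraction, and improves the connectivity of the segmented vessels. In addition, the algorithm adopts a recursive refinement scheme: the segmentation result is repeatedly fed back into the same network, so segmentation accuracy and connectivity keep improving without adding network parameters or making training harder. Experimental results show that the method is on par with state-of-the-art retinal vessel segmentation methods on the usual evaluation metrics (AUC, SE, SP), while substantially outperforming existing methods on vessel-tree connectivity metrics (INF, COR), which demonstrates the effectiveness of the recursive semantics-guided network.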
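The recursive refinement idea can be illustrated with a toy model. In this sketch, a hypothetical `toy_segmenter` (a single pixel-wise linear layer standing in for the three-level U-Net) takes the image plus the previous prediction, and the same weights are reused on every pass, so the recursion adds no parameters. It is not the group's network:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def toy_segmenter(image, prev_pred, weights):
    """Hypothetical stand-in for the U-Net: one pixel-wise linear layer.

    It sees both the image and the previous prediction, so each pass can
    refine the last one. The same `weights` are used on every call.
    """
    w_img, w_prev, bias = weights
    return sigmoid(w_img * image + w_prev * prev_pred + bias)


def recursive_refine(image, weights, steps=3):
    """Feed the prediction back into the same model `steps` times."""
    pred = np.zeros_like(image, dtype=float)  # first pass starts from an empty prediction
    history = []
    for _ in range(steps):
        pred = toy_segmenter(image, pred, weights)  # same weights every iteration
        history.append(pred)
    return history
```

Because the `weights` tuple is shared across iterations, increasing `steps` changes only computation time, not model size.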

[1] Rui Xu, Guiliang Jiang, Xinchen Ye, Yen-Wei Chen, Retinal Vessel Segmentation via Multiscaled Deep Guidance, Pacific Rim Conference on Multimedia (PCM 2018), Hefei, China, September 21-22, 2018.

[2] Rui Xu, Xinchen Ye, Guiliang Jiang, Tiantian Liu, Liang Li, Satoshi Tanaka, Retinal Vessel Segmentation via a Semantics and Multi-Scale Aggregation Network, IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2020), Virtual Barcelona, May 4-8, 2020.

[3] Rui Xu, Tiantian Liu, Xinchen Ye, Yen-Wei Chen*, Lin Lin, Yen-Wei Chen, Boosting Connectivity in Retinal Vessel Segmentation via a Recursive Semantics-Guided Network, International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2020), Virtual Lima, Peru, October 4-8, 2020. (arXiv version: https://arxiv.org/abs/2004.12776)

-

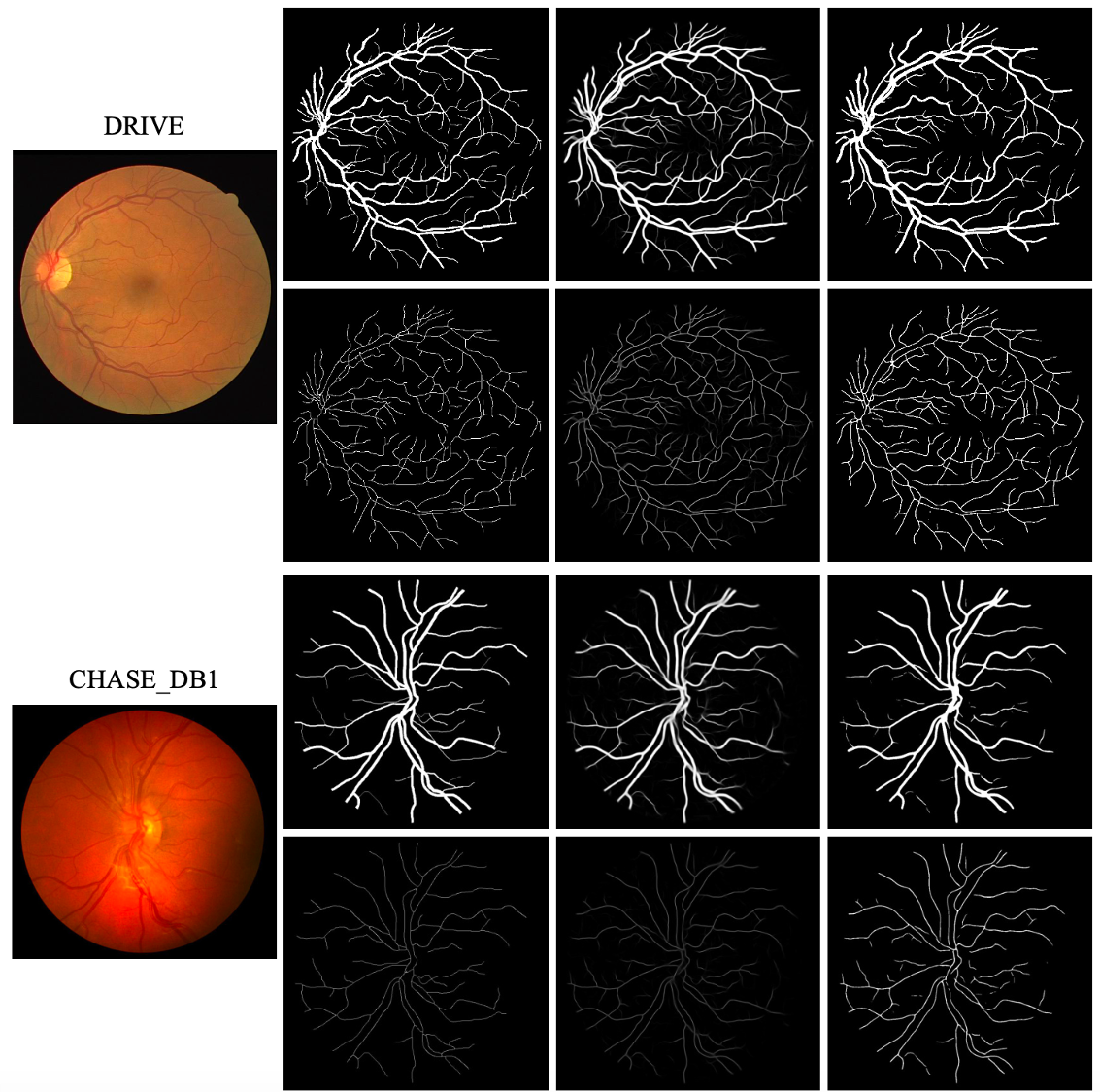

Retinal vessel segmentation and centerline extraction are crucial steps in building a computer-aided diagnosis system for retinal images. Previous works treat them as two isolated tasks, ignoring their close association. In this paper, we propose a deep semantics and multi-scaled cross-task aggregation network that exploits this association to jointly improve performance on both tasks. Our network consists of two sub-networks. The front part is a deep semantics aggregation sub-network that aggregates strong semantic information to produce more powerful features for both tasks, and the tail is a multi-scaled cross-task aggregation sub-network that explores complementary information between the tasks to refine the results. We evaluate the proposed method on three public databases: DRIVE, STARE, and CHASE_DB1. Experimental results show that our method not only extracts retinal vessels and their centerlines simultaneously but also achieves state-of-the-art performance on both tasks.
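Joint training of the two tasks can be sketched as a weighted sum of per-task losses. The abstract does not state the loss; binary cross-entropy and the weight `lam` below are assumptions for illustration, and the function names are hypothetical:

```python
import numpy as np


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between probabilities and {0, 1} labels."""
    p = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))


def joint_loss(vessel_pred, vessel_gt, center_pred, center_gt, lam=1.0):
    """Hypothetical multi-task objective: vessel BCE + lam * centerline BCE.

    Both heads share the same backbone features, so gradients from the
    centerline term also shape the features used for vessel segmentation.
    """
    return bce(vessel_pred, vessel_gt) + lam * bce(center_pred, center_gt)
```

Centerline pixels are far sparser than vessel pixels, so in practice `lam` (or a class-balanced loss) would likely need tuning; that choice is an assumption here, not something the abstract specifies.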

[1] Rui Xu, Tiantian Liu, Xinchen Ye*, Fei Liu, Lin Lin, Liang Li, Satoshi Tanaka, Yen-Wei Chen, Joint Extraction of Retinal Vessels and Centerlines Based on Deep Semantics and Multi-Scaled Cross-Task Aggregation, IEEE Journal of Biomedical and Health Informatics, DOI: 10.1109/JBHI.2020.3044957, 2020. (CAS Zone 1 Top journal; accepted)

-

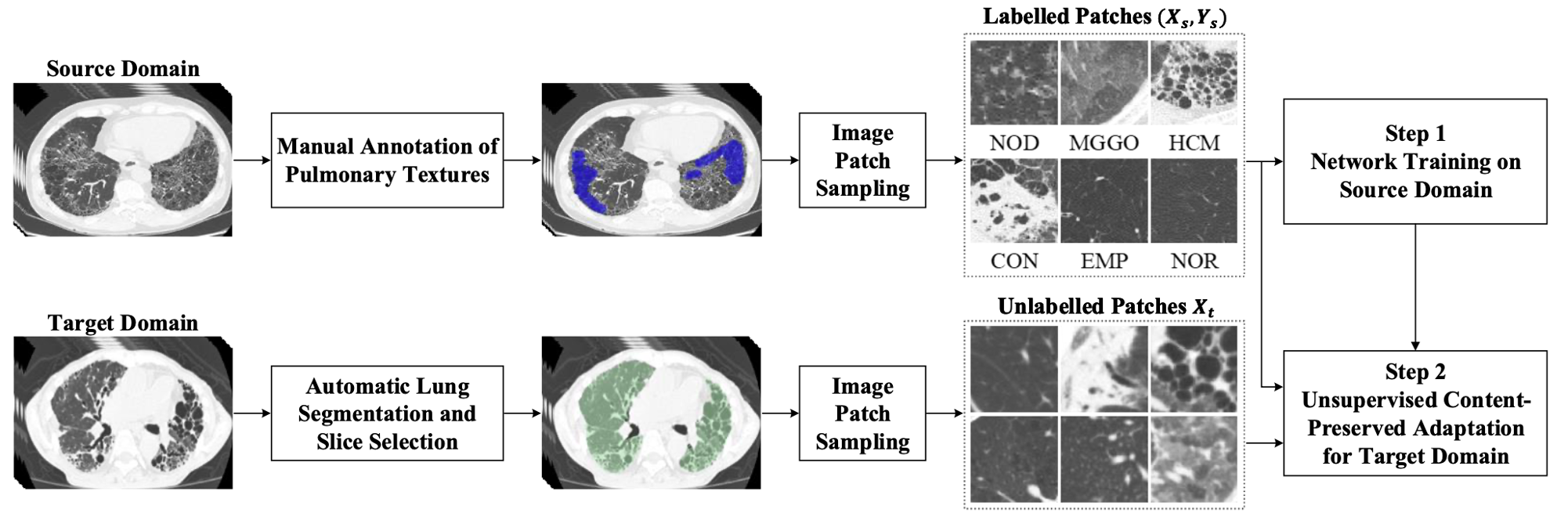

Deep-network-based methods have been proposed for accurate classification of pulmonary textures on CT images. However, such methods, even when well trained on CT data from one scanner, cannot perform well when directly applied to data from other scanners. This domain shift problem is caused by the different physical components and scanning protocols of different CT scanners. In this paper, we propose an unsupervised content-preserved adaptation network to address this problem. Our method adapts a previously well-trained deep network to the data of a new CT scanner and does not require laborious annotation to delineate pulmonary texture regions on the new CT data. Extensive evaluations have been carried out on images collected from GE and Toshiba CT scanners, and show that the proposed method can alleviate the performance degradation in classifying pulmonary textures from different CT scanners.
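The abstract does not spell out the training objective. A minimal sketch of one plausible form, assuming an adversarial term that makes target-scanner features indistinguishable from source-scanner features plus a reconstruction term that preserves image content; the weighting `lam=10.0` and all names are hypothetical. Neither term uses texture labels from the new scanner:

```python
import numpy as np


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between probabilities and {0, 1} labels."""
    p = np.clip(pred, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))


def adaptation_loss(disc_on_target, target_images, reconstructed, lam=10.0):
    """Hypothetical generator-side objective for unsupervised adaptation.

    disc_on_target: discriminator's probability that each target-scanner
        feature came from the source scanner.
    adversarial term: label target features as "source" (1) so the encoder
        learns to fool the discriminator; no texture annotations needed.
    content term: mean squared error between each target image and its
        reconstruction from the adapted features, so content is preserved.
    """
    adversarial = bce(disc_on_target, np.ones_like(disc_on_target))
    content = float(np.mean((target_images - reconstructed) ** 2))
    return adversarial + lam * content
```

The content term guards against a known failure of purely adversarial adaptation: features can become scanner-invariant by discarding the very texture information the classifier needs.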

[1] Rui Xu, Zhen Cong, Xinchen Ye*, Shoji Kido, Noriyuki Tomiyama, Unsupervised Content-Preserved Adaptation Network for Classification of Pulmonary Textures from Different CT Scanners, IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2020), Virtual Barcelona, May 4-8, 2020. (CCF-B)

-

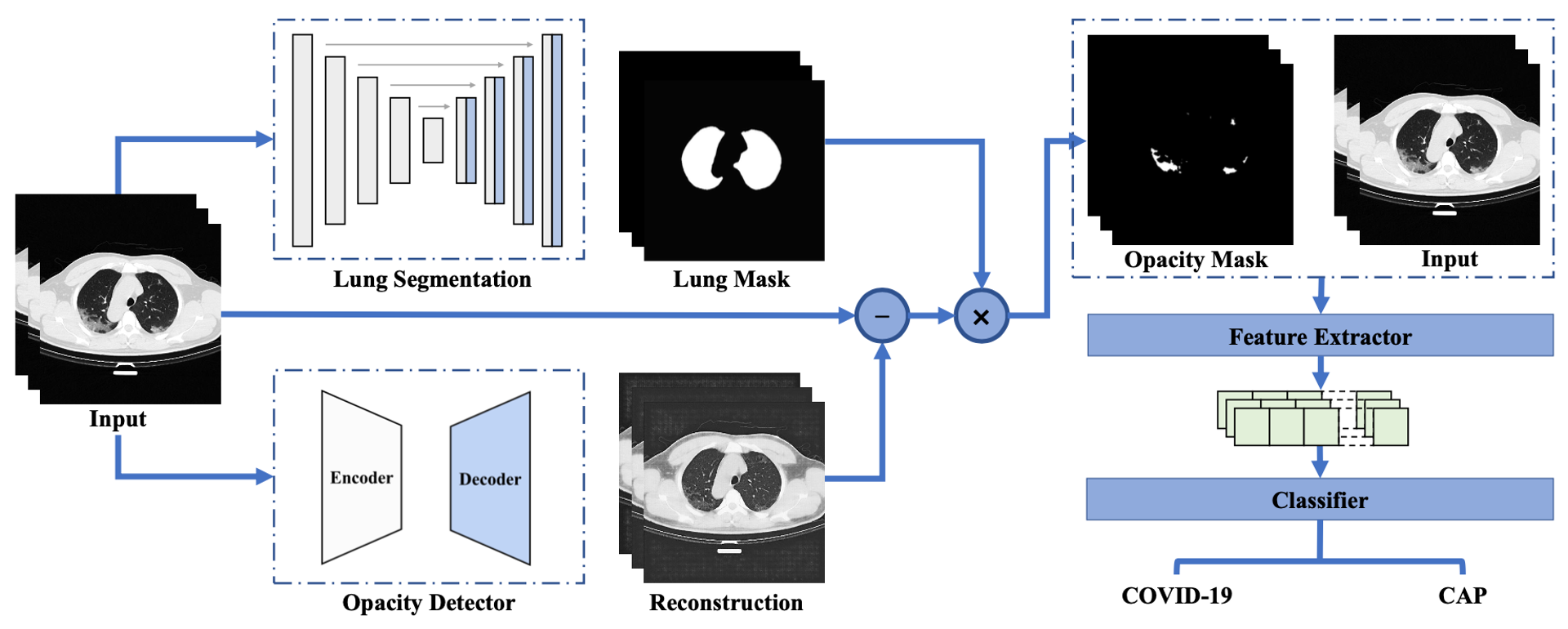

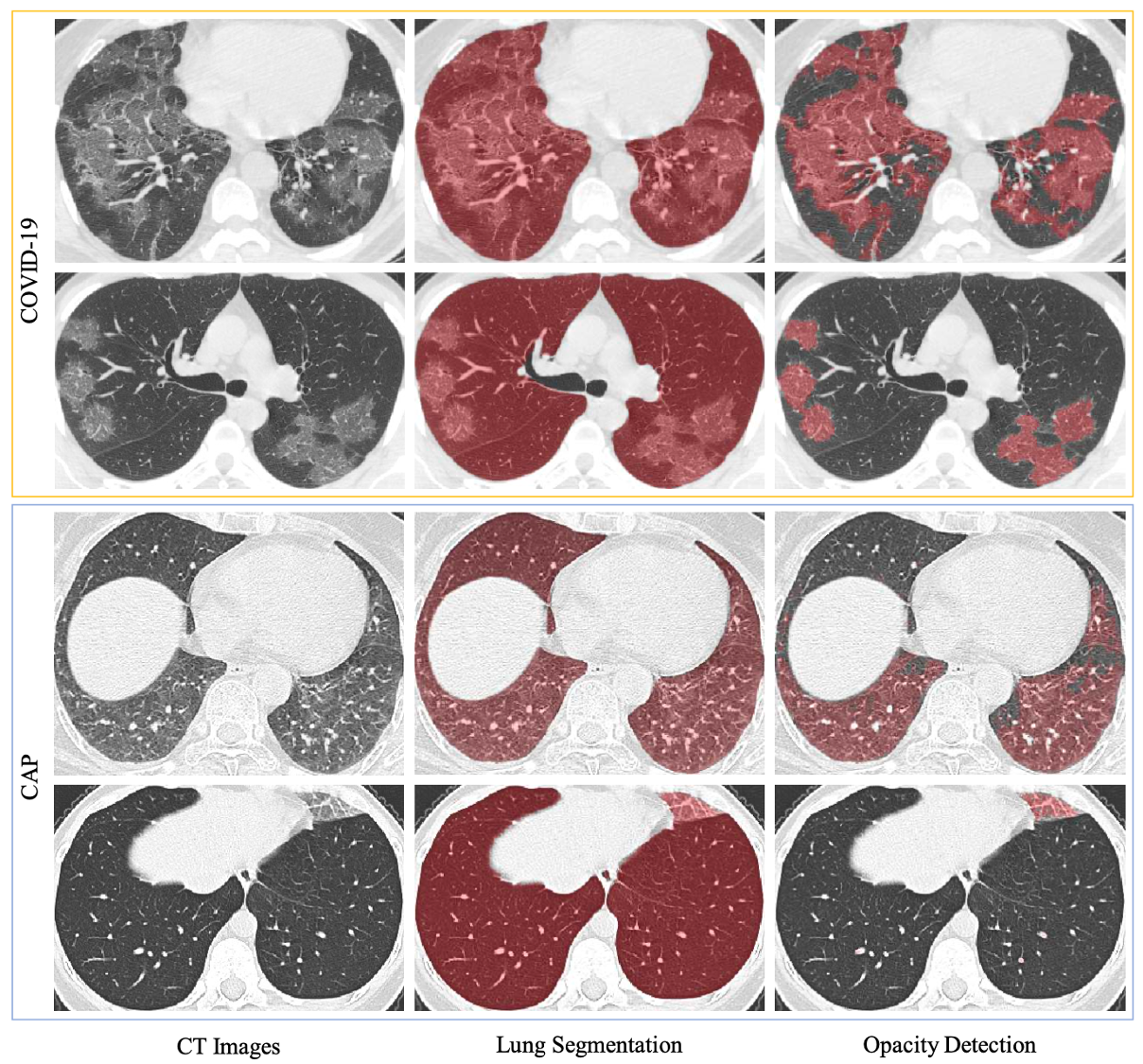

COVID-19 emerged toward the end of 2019 and was identified as a global pandemic by the World Health Organization (WHO). With the rapid spread of COVID-19, the number of infected and suspected patients has increased dramatically. Chest computed tomography (CT) has been recognized as an efficient tool for the diagnosis of COVID-19. However, the huge volume of CT data makes it difficult for radiologists to fully exploit it in diagnosis. In this paper, we propose a computer-aided diagnosis (CAD) system that automatically analyzes CT images to distinguish COVID-19 from community-acquired pneumonia (CAP). The proposed system is based on an unsupervised pulmonary opacity detection method that locates opacity regions with a detector trained, without supervision, on CT images of normal lung tissue. Radiomics-based features are extracted inside the opacity regions and fed into classifiers for classification. We evaluate the proposed CAD system on 200 CT images collected from different patients in several hospitals. The system achieves an accuracy, precision, recall, F1-score, and AUC of 95.5%, 100%, 91%, 95.1%, and 95.9%, respectively, showing promising capability for the differential diagnosis of COVID-19 from CT images.
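The detection-then-features pipeline can be sketched as follows. As a simple stand-in for the group's unsupervised detector, whose design the abstract does not give, this sketch fits a Gaussian to features from normal-lung patches only and scores new patches by squared Mahalanobis distance; high scores suggest opacities. The first-order statistics are a small, hypothetical subset of radiomics features, and all function names are illustrative:

```python
import numpy as np


def fit_normal_model(normal_feats):
    """Fit a Gaussian to feature vectors from normal-lung patches (no labels)."""
    mu = normal_feats.mean(axis=0)
    # Small ridge keeps the covariance invertible.
    cov = np.cov(normal_feats, rowvar=False) + 1e-6 * np.eye(normal_feats.shape[1])
    return mu, np.linalg.inv(cov)


def anomaly_scores(feats, mu, cov_inv):
    """Squared Mahalanobis distance of each row to the normal-tissue model."""
    d = feats - mu
    return np.einsum("ij,jk,ik->i", d, cov_inv, d)


def first_order_features(values, bins=32):
    """A few first-order radiomics-style statistics of HU values in a region."""
    hist, _ = np.histogram(values, bins=bins)
    p = hist[hist > 0] / hist.sum()  # empirical bin probabilities
    return {
        "mean": float(values.mean()),
        "std": float(values.std()),
        "entropy": float(-(p * np.log2(p)).sum()),
    }
```

Patches whose score exceeds a threshold chosen on held-out normal data would be treated as opacity regions, and features computed inside those regions would then be passed to a classifier.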

[1] Rui Xu, Xiao Cao, Yufeng Wang, Yen-Wei Chen, Xinchen Ye*, Lin Lin, Wenchao Zhu, Chao Chen, Fangyi Xu, Yong Zhou, Hongjie Hu, Shoji Kido, Noriyuki Tomiyama, Unsupervised Detection of Pulmonary Opacities for Computer-Aided Diagnosis of COVID-19 on CT Images, International Conference on Pattern Recognition (ICPR), Milan, Italy, January 10-15, 2021. (CCF-C)