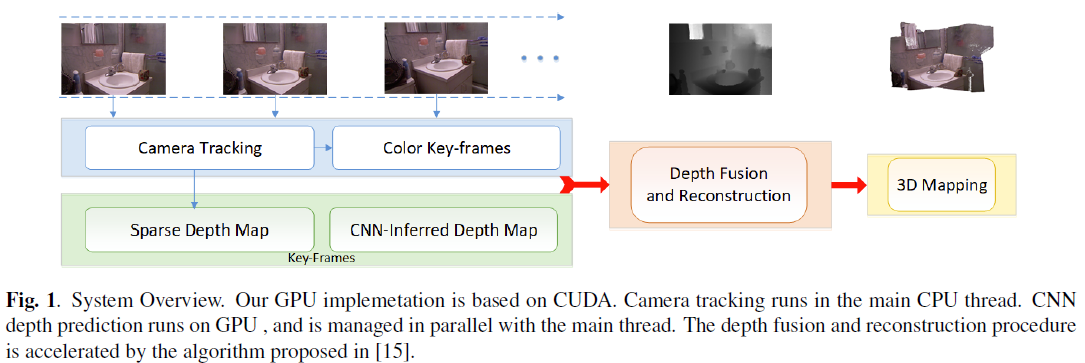

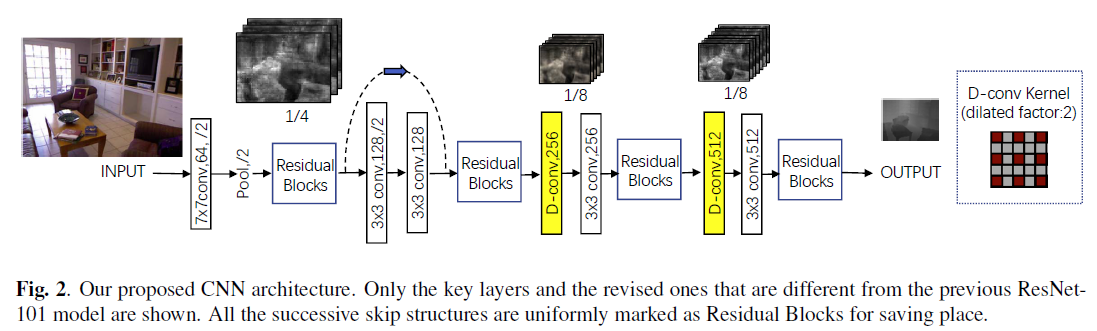

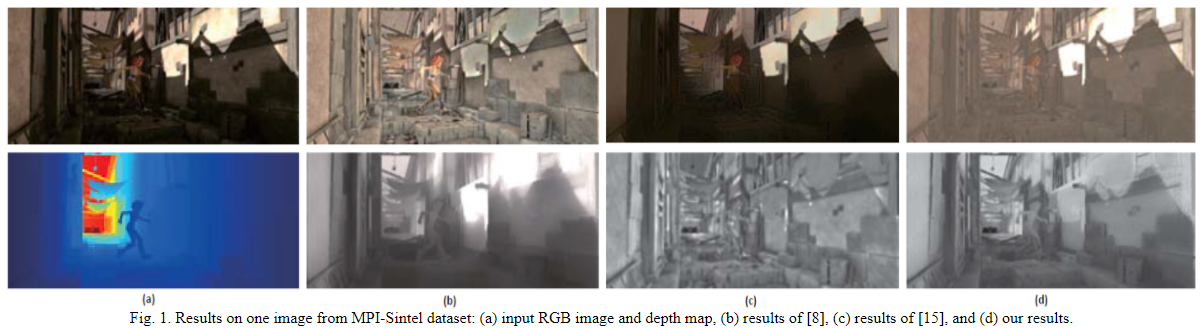

Dense Reconstruction from Monocular SLAM with Fusion of Sparse Map-Points and CNN-Inferred Depth

ABSTRACT

Real-time monocular visual SLAM approaches that build sparse correspondences between two or more views of the scene can accurately track camera pose and infer the structure of the environment. However, these methods share a common problem: the reconstructed 3D map is extremely sparse. Recently, convolutional neural networks (CNNs) have been widely used to estimate scene depth from monocular color images. We observe that sparse map-points generated from epipolar geometry are locally accurate, while a CNN-inferred depth map contains high-level global context but has blurry depth boundaries. Therefore, we propose a depth fusion framework that yields a dense monocular reconstruction by fully exploiting both the sparse depth samples and the CNN-inferred depth. Color key-frames are employed to guide the depth reconstruction process, which avoids smoothing over depth boundaries. Experimental results on benchmark datasets show the robustness and accuracy of our method.

Index Terms— Dense reconstruction, Visual SLAM, Monocular, Sparse map-point, Depth prediction.
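The fusion idea in the abstract above can be illustrated with a deliberately simple sketch. It is not the framework proposed in the paper (which also uses color key-frames as guidance); it only assumes that the CNN depth differs from the metric depth by a global scale, estimates that scale from the sparse map-points, and keeps the sparse samples where they exist. The function name `fuse_sparse_and_cnn_depth` and the NaN convention for pixels without a map-point are assumptions made for this example.

```python
import numpy as np

def fuse_sparse_and_cnn_depth(cnn_depth, sparse_depth):
    """Align a dense CNN depth map to sparse map-point depths.

    sparse_depth uses NaN wherever no map-point is available (assumed convention).
    """
    cnn_depth = np.asarray(cnn_depth, dtype=float)
    sparse_depth = np.asarray(sparse_depth, dtype=float)
    has_sample = ~np.isnan(sparse_depth)
    if not has_sample.any():
        # Nothing to align against: fall back to the CNN prediction.
        return cnn_depth.copy()
    # Robust global scale: median ratio between sparse and CNN depth.
    scale = np.median(sparse_depth[has_sample] / cnn_depth[has_sample])
    fused = scale * cnn_depth
    # Trust the locally accurate sparse samples where they exist.
    fused[has_sample] = sparse_depth[has_sample]
    return fused

# Toy example: the CNN depth is off by a factor of two.
truth = np.array([[2.0, 4.0], [6.0, 8.0]])
sparse = np.full(truth.shape, np.nan)
sparse[0, 0], sparse[1, 1] = truth[0, 0], truth[1, 1]
fused = fuse_sparse_and_cnn_depth(0.5 * truth, sparse)
```

A single global scale cannot repair blurry boundaries in the CNN output, which is the gap the color-guided reconstruction described in the abstract is meant to close.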

SOURCE CODE

Coming soon. The source code is for non-commercial use only.

PUBLICATIONS

[1] Xiang Ji, Xinchen Ye*, Hongcan Xu, Haojie Li, Dense Reconstruction from Monocular SLAM with Fusion of Sparse Map-Points and CNN-Inferred Depth. IEEE International Conference on Multimedia and Expo, ICME 2018, San Diego, USA. (CCF-B)

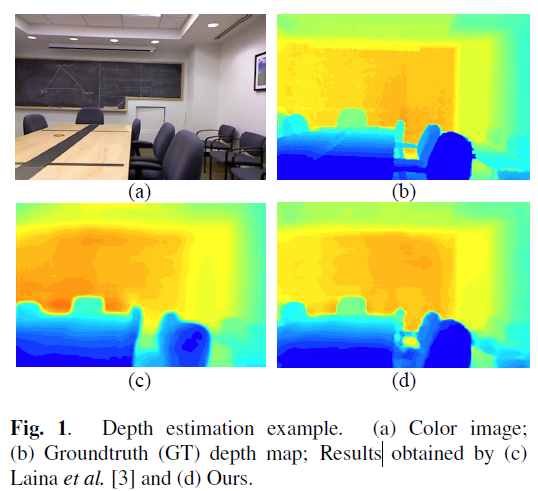

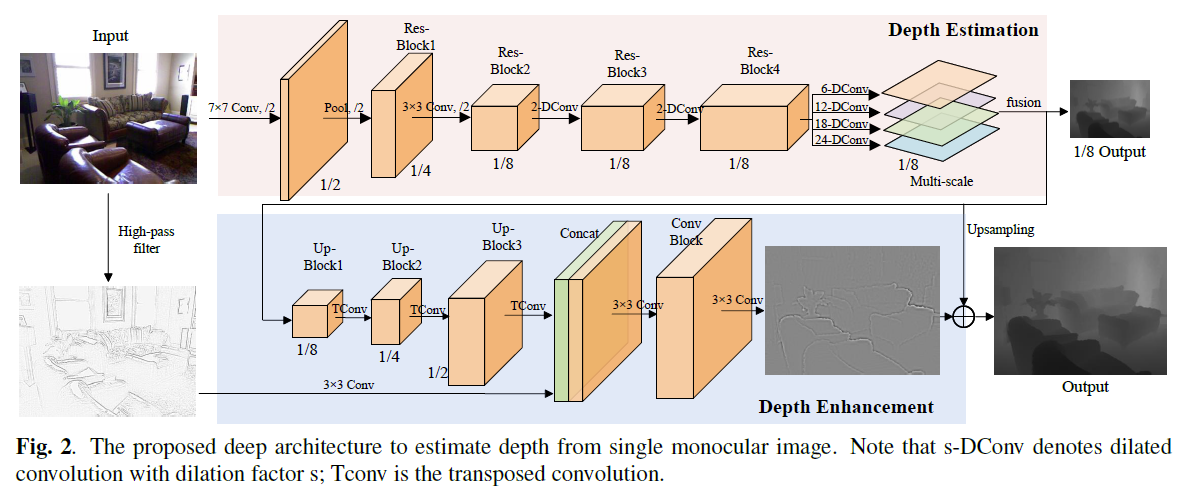

High Quality Depth Estimation from Monocular Images Based on Depth Prediction and Enhancement Sub-Networks

ABSTRACT

This paper addresses the problem of depth estimation from a single RGB image. Previous methods mainly focus on depth prediction accuracy and output depth resolution, but few of them handle both problems well. Here, we present a novel depth estimation framework based on a deep convolutional neural network (CNN) that learns the mapping between monocular images and depth maps. The proposed architecture is divided into two components: a depth prediction sub-network and a depth enhancement sub-network. We first design a depth prediction network based on the ResNet architecture to infer the scene depth from a color image. Then, a depth enhancement network is appended to the end of the depth prediction network to obtain a high-resolution depth map. Experimental results show that the proposed method outperforms other methods on benchmark RGB-D datasets and achieves state-of-the-art performance.

Index Terms— Depth Estimation, CNN, Depth Prediction, Depth Enhancement, Monocular

SOURCE CODE

Coming soon. The source code is for non-commercial use only.

PUBLICATIONS

[1] Xiangyue Duan, Xinchen Ye*, Yang Li, Haojie Li, High Quality Depth Estimation from Monocular Images Based on Depth Prediction and Enhancement Sub-Networks. IEEE International Conference on Multimedia and Expo, ICME 2018, San Diego, USA. (CCF-B)

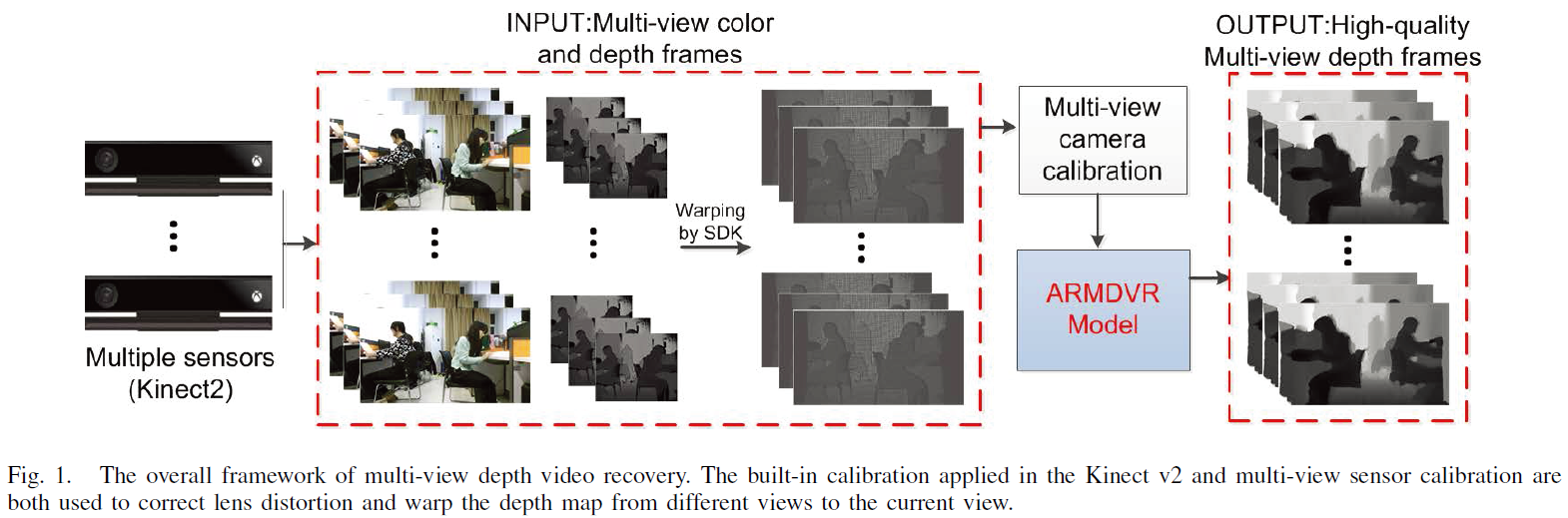

Global autoregressive depth recovery via non-local iterative filtering

Abstract—Existing depth sensing techniques have many shortcomings in terms of resolution, completeness, and accuracy. The performance of 3D broadcasting systems is therefore limited by the challenges of capturing high-resolution depth data. In this paper, we present a novel framework for obtaining high-quality depth images and multi-view depth videos from simple acquisition systems. We first propose a single depth image recovery (ARSDIR) algorithm based on auto-regressive (AR) correlations. A fixed-point iteration algorithm under global AR modeling is derived to efficiently solve the resulting large-scale quadratic programming problem. Each iteration is equivalent to a nonlocal filtering process with a residue feedback. Then, we extend our framework to an AR-based multi-view depth video recovery (ARMDVR) framework, where each depth map is recovered from low-quality measurements with the help of the corresponding color image, depth maps from neighboring views, and depth maps of temporally adjacent frames. AR coefficients on nonlocal spatiotemporal neighborhoods are designed to improve the recovery performance. We further discuss the connections between our model and other methods such as graph-based tools, and demonstrate that our algorithms enjoy the advantages of both global and local methods. Experimental results on both the Middlebury datasets and other captured datasets show that our method improves the recovery of depth images and multi-view depth videos compared with state-of-the-art approaches.

Index Terms—Depth recovery, multi-view, auto regressive, nonlocal, iterative filtering
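The phrase "each iteration is equivalent to a nonlocal filtering process with a residue feedback" can be made concrete with a toy 1D sketch. The code below is not the fixed-point iteration derived in the paper: it assumes a fixed two-neighbour averaging filter instead of nonlocal AR coefficients, and it feeds the full measurement residue back at observed samples. The function name and parameters are hypothetical.

```python
import numpy as np

def recover_by_iterative_filtering(measured, observed, num_iters=500):
    """Fill missing samples by alternating filtering and residue feedback."""
    measured = np.asarray(measured, dtype=float)
    observed = np.asarray(observed, dtype=bool)
    # Initialise missing samples with the mean of the observed ones.
    x = np.where(observed, measured, measured[observed].mean())
    for _ in range(num_iters):
        # Filtering step: predict each sample from its two neighbours
        # (border samples are replicated by the padding).
        padded = np.pad(x, 1, mode="edge")
        filtered = 0.5 * (padded[:-2] + padded[2:])
        # Residue feedback: add the measurement residue back at observed samples.
        residue = np.where(observed, measured - filtered, 0.0)
        x = filtered + residue
    return x

# Toy example: a linear ramp with four missing samples.
truth = np.linspace(0.0, 8.0, 9)
observed = np.array([True, False, True, True, False, False, True, False, True])
measured = np.where(observed, truth, np.nan)
recovered = recover_by_iterative_filtering(measured, observed)
```

On this ramp the iteration converges to the original signal, because a linear signal is reproduced exactly by averaging its two neighbours.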

SOURCE CODE

Coming soon. The source code is for non-commercial use only.

PUBLICATIONS

[1] Jingyu Yang, Xinchen Ye*, Global autoregressive depth recovery via non-local iterative filtering. Early Access, IEEE Transactions on Broadcasting, 2018. (CAS Journal Ranking, Zone 2)

Other Related PUBLICATIONS

[1] Xinchen Ye, Xiaolin Song and Jingyu Yang*. Depth Recovery via Decomposition of Polynomial and Piece-wise Constant Signals. Visual Communications and Image Processing, 2016, Chengdu, China.

[2] Jinghui Bai, Jingyu Yang* and Xinchen Ye. Depth Refinement for Binocular Kinect RGB-D Cameras. Visual Communications and Image Processing, 2016, Chengdu, China.

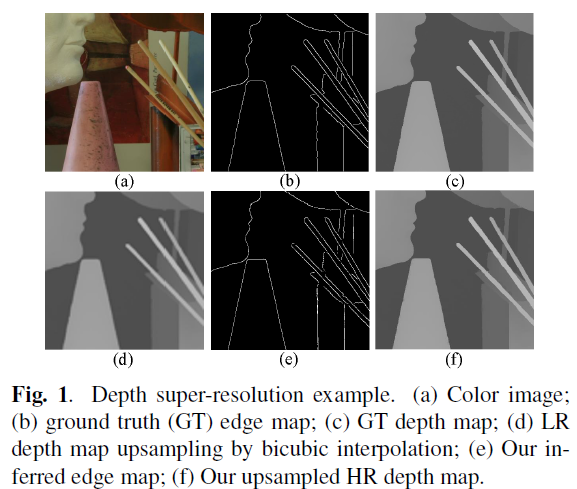

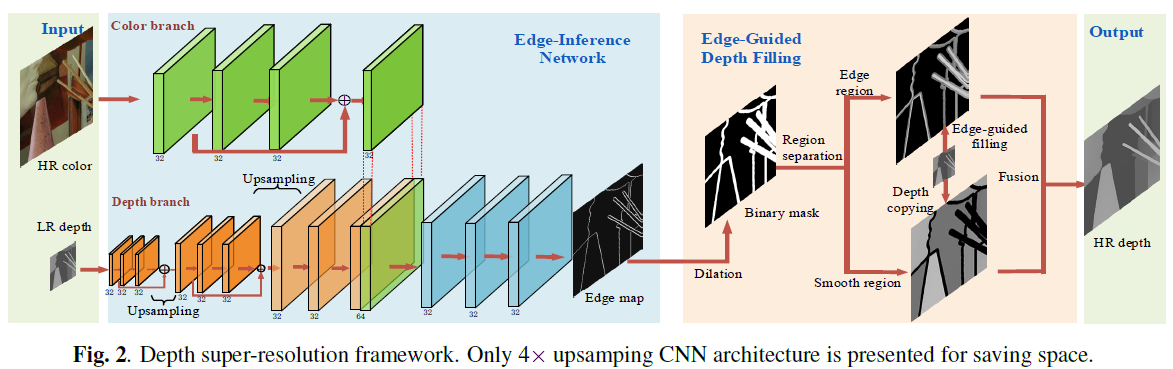

Depth Super-Resolution With Deep Edge-Inference Network and Edge-Guided Depth Filling

ABSTRACT

In this paper, we propose a novel depth super-resolution framework with a deep edge-inference network and edge-guided depth filling. We first construct a convolutional neural network (CNN) architecture to learn a binary map of depth edge locations from a low-resolution depth map and the corresponding color image. Then, a fast edge-guided depth filling strategy is proposed to interpolate the missing depth values, constrained by the acquired edges so that no prediction is made across depth boundaries. Experimental results show that our method outperforms state-of-the-art methods in both edge inference and the final depth super-resolution results, and generalizes well to depth data captured in different scenes.

Index Terms— Super-resolution, depth image, edge-inference, edge-guided
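To illustrate what "interpolating missing depth constrained by edges" means, here is a minimal 1D sketch. It is not the filling strategy from the paper: it assumes the edge map is already given (in the paper it comes from the CNN), works on a single scanline, and uses plain linear interpolation inside each edge-free segment. The names `edge_guided_fill` and `edge_before` are hypothetical.

```python
import numpy as np

def edge_guided_fill(sparse_depth, edge_before):
    """Fill NaN depth values without interpolating across depth edges.

    edge_before[i] is True when a depth edge lies between samples i-1 and i.
    """
    depth = np.asarray(sparse_depth, dtype=float)
    edge_before = np.asarray(edge_before, dtype=bool)
    filled = depth.copy()
    # Segment boundaries: the start, every edge position, and the end.
    bounds = np.unique(
        np.concatenate(([0], np.flatnonzero(edge_before), [depth.size]))
    )
    for lo, hi in zip(bounds[:-1], bounds[1:]):
        idx = np.arange(lo, hi)
        known = ~np.isnan(depth[lo:hi])
        if known.any():
            # Interpolate using only samples from the same segment.
            filled[lo:hi] = np.interp(idx, idx[known], depth[lo:hi][known])
    return filled

# Toy scanline: two known samples on opposite sides of an edge.
scanline = np.array([1.0, np.nan, np.nan, np.nan, np.nan, 5.0])
edges = np.array([False, False, False, True, False, False])
filled = edge_guided_fill(scanline, edges)
```

Interpolating the same scanline without the edge constraint would produce a ramp between 1 and 5, which is exactly the blurred boundary that the edge guidance is meant to prevent.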

SOURCE CODE

Coming soon. The source code is for non-commercial use only.

PUBLICATIONS

[1] Xinchen Ye*, Xiangyue Duan, Haojie Li, Depth Super-Resolution With Deep Edge-Inference Network and Edge-Guided Depth Filling. IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Calgary, Alberta, Canada. (CCF-B)

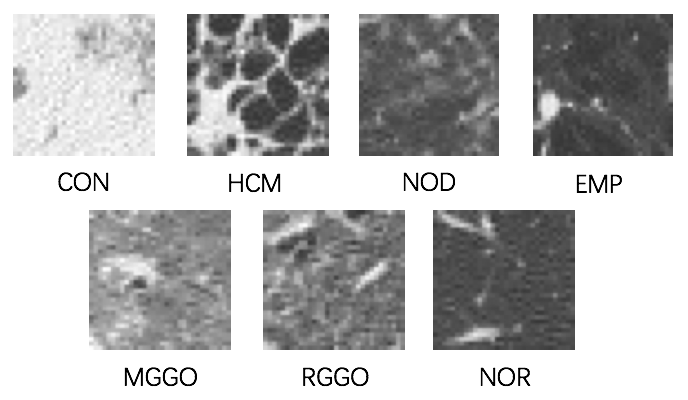

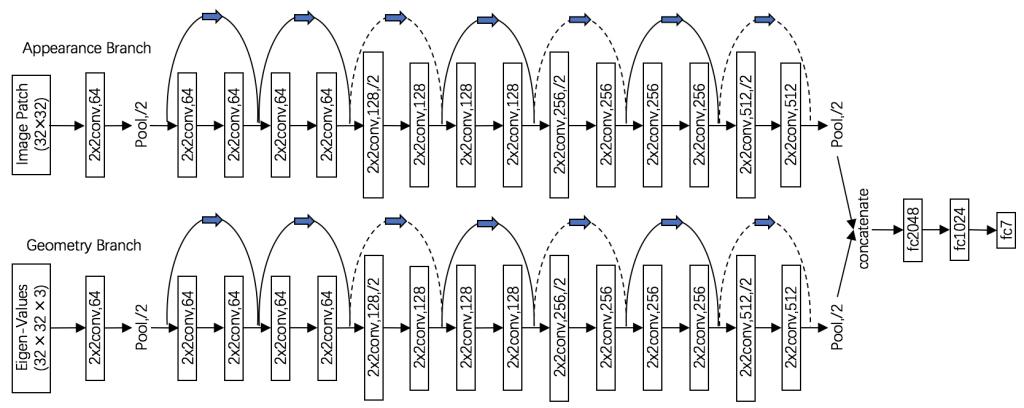

Pulmonary Textures Classification Using A Deep Neural Network with Appearance and Geometry Cues

ABSTRACT

Classification of pulmonary textures on CT images is essential for the development of a computer-aided diagnosis system for diffuse lung diseases. In this paper, we propose a novel method that classifies pulmonary textures with a deep neural network, which makes full use of the appearance and geometry cues of textures via a dual-branch architecture. The proposed method has been evaluated on a dataset that includes seven kinds of typical pulmonary textures. Experimental results show that our method outperforms state-of-the-art methods, including feature-engineering-based and convolutional-neural-network-based methods.

Index Terms— residual network, pulmonary texture, Hessian matrix, CAD, CT
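The index terms list the Hessian matrix alongside the geometry cues. As a hedged illustration only, the sketch below computes per-pixel Hessian eigenvalues of a 2D patch with finite differences. How the paper actually derives its geometry input from the Hessian is not stated here, so the 2D setting, the use of `np.gradient`, and the function name are all assumptions.

```python
import numpy as np

def hessian_eigenvalues(patch):
    """Return the smaller and larger Hessian eigenvalue at every pixel."""
    patch = np.asarray(patch, dtype=float)
    # First derivatives along rows (y) and columns (x).
    gy, gx = np.gradient(patch)
    # Second derivatives; gyx is unused because the Hessian is symmetric.
    gyy, _gyx = np.gradient(gy)
    gxy, gxx = np.gradient(gx)
    # Closed-form eigenvalues of the symmetric matrix [[gxx, gxy], [gxy, gyy]].
    mean = 0.5 * (gxx + gyy)
    radius = np.sqrt((0.5 * (gxx - gyy)) ** 2 + gxy ** 2)
    return mean - radius, mean + radius

# Toy patch: intensity grows quadratically along the columns only.
patch = np.tile(np.arange(7.0) ** 2, (7, 1))
low, high = hessian_eigenvalues(patch)
```

For this patch the curvature is confined to one direction, so away from the borders one eigenvalue is zero and the other equals the second derivative of the quadratic.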

SOURCE CODE

Coming soon. The source code is for non-commercial use only.

PUBLICATIONS

[1] Rui Xu, Zhen Cong, Xinchen Ye*, Pulmonary Textures Classification Using A Deep Neural Network with Appearance and Geometry Cues, IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Calgary, Alberta, Canada. (CCF-B)

[2] Rui Xu, Jiao Pan, Xinchen Ye, S. Kido and S. Tanaka, "A pilot study to utilize a deep convolutional network to segment lungs with complex opacities," IEEE Chinese Automation Congress (CAC), Jinan, 2017, pp. 3291-3295.

IDEA: Intrinsic Decomposition Algorithm With Sparse and Non-local Priors

World’s FIRST 10K Best Paper Award – Platinum Award

ABSTRACT

This paper proposes a new intrinsic decomposition algorithm (IDEA) that decomposes a single RGB-D image into reflectance and shading components. We observe and verify that the shading image mainly contains smooth regions separated by curves, and that its gradient distribution is sparse. We therefore use the L1-norm to model the direct irradiance component, which is the main sub-component extracted from the shading component. Moreover, a non-local prior weighted by a bilateral kernel on a larger neighborhood is designed to fully exploit structural correlation in the reflectance component and improve the decomposition performance. The model is solved by the alternating direction method under the augmented Lagrangian multiplier (ADM-ALM) framework. Experimental results on both synthetic and real datasets demonstrate that the proposed method yields better results and has lower complexity than state-of-the-art methods.

Keywords: Intrinsic decomposition, RGB-D, sparse, non-local
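In alternating direction solvers, a term that penalizes the L1-norm of an auxiliary variable is commonly updated with element-wise soft-thresholding (shrinkage). The sketch below shows that operator only; whether the L1 subproblem in IDEA reduces to exactly this form is an assumption, and the function name is hypothetical.

```python
import numpy as np

def soft_threshold(values, threshold):
    """Element-wise minimiser of threshold * |z| + 0.5 * (z - v)**2 over z."""
    values = np.asarray(values, dtype=float)
    return np.sign(values) * np.maximum(np.abs(values) - threshold, 0.0)

# Small gradients are set to zero, large ones are shrunk towards zero.
gradients = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
shrunk = soft_threshold(gradients, 1.0)
```

Applied to image gradients, the operator zeroes small variations and keeps the few large ones, which matches the sparse gradient distribution described in the abstract.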

SOURCE CODE

Coming soon. The source code is for non-commercial use only.

PUBLICATIONS

[1] Yujie Wang, Kun Li, Jingyu Yang, Xinchen Ye, “Intrinsic image decomposition from a single RGB-D image with sparse and non-local priors”, IEEE International Conference on Multimedia and Expo (ICME), July 10-15, 2017, Hong Kong, China. [pdf]

[2] Kun Li, Yujie Wang, Jingyu Yang, Xinchen Ye, “IDEA: Intrinsic decomposition algorithm with sparse and non-local priors”, submitted to IEEE Transactions on Image Processing, 2017.

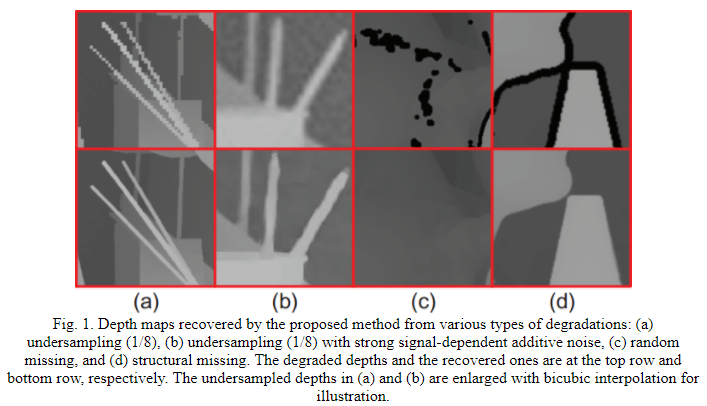

Color-Guided Depth Recovery From RGB-D Data Using an Adaptive Autoregressive Model

Jingyu Yang, Xinchen Ye*, Kun Li, Chunping Hou, and Yao Wang

http://cs.tju.edu.cn/faculty/likun/projects/depth_recovery/index.htm

Abstract

This paper proposes an adaptive color-guided auto-regressive (AR) model for high-quality depth recovery from low-quality measurements captured by depth cameras. We observe and verify that the AR model tightly fits depth maps of generic scenes. The depth recovery task is formulated as a minimization of AR prediction errors subject to measurement consistency. The AR predictor for each pixel is constructed according to both the local correlation in the initial depth map and the nonlocal similarity in the accompanying high-quality color image. We analyze the stability of our method from a linear system point of view, and design a parameter adaptation scheme to achieve stable and accurate depth recovery. Quantitative and qualitative results show that our method outperforms four state-of-the-art schemes. Because it handles various types of depth degradation, the proposed method is versatile for mainstream depth sensors such as ToF cameras and Kinect, as demonstrated by experiments on real systems.

Keywords: Depth recovery (upsampling, inpainting, denoising), autoregressive model, RGB-D camera (ToF camera, Kinect)
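The formulation in the abstract, minimizing AR prediction errors while staying consistent with the measurements, can be sketched on a 1D toy signal. This is a simplified illustration, not the adaptive model from the paper: it assumes two-neighbour predictors, Gaussian weights on guidance differences only, and a quadratic penalty with weight `lam` in place of a hard consistency constraint. All names and parameter values are hypothetical.

```python
import numpy as np

def ar_depth_recovery(measured, observed, guidance=None, sigma=0.1, lam=100.0):
    """Minimise ||x - A x||^2 + lam * sum over observed i of (x_i - y_i)^2."""
    measured = np.asarray(measured, dtype=float)
    observed = np.asarray(observed, dtype=bool)
    if guidance is not None:
        guidance = np.asarray(guidance, dtype=float)
    n = measured.size
    A = np.zeros((n, n))
    for i in range(n):
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < n]
        if guidance is None:
            weights = np.ones(len(neighbours))
        else:
            # Color guidance: neighbours with similar color get larger weights.
            diff = guidance[neighbours] - guidance[i]
            weights = np.exp(-diff ** 2 / (2.0 * sigma ** 2)) + 1e-12
        A[i, neighbours] = weights / weights.sum()
    residual_op = np.eye(n) - A                # AR prediction error operator
    M = np.diag(observed.astype(float))        # selects observed samples
    y = np.where(observed, measured, 0.0)
    lhs = residual_op.T @ residual_op + lam * M
    rhs = lam * (M @ y)
    return np.linalg.solve(lhs, rhs)

# Toy step edge: depth and color jump at the same position.
truth = np.array([1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0])
color = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0])
observed = np.array([True, False, True, False, False, True, False, True])
measured = np.where(observed, truth, np.nan)
guided = ar_depth_recovery(measured, observed, guidance=color)
unguided = ar_depth_recovery(measured, observed)
```

With the color guidance the step edge is recovered sharply, while the unguided predictor averages across the edge and smears the missing samples next to it.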

Downloads

Datasets: Various datasets can be downloaded via the links attached to the following tables and figures.

Source code: download here

The degraded datasets in Table 1 can be obtained by downsampling the original high-resolution Middlebury datasets.

Publications

1. Jingyu Yang, Xinchen Ye, Kun Li, Chunping Hou, Yao Wang, “Color-Guided Depth Recovery From RGB-D Data Using an Adaptive Autoregressive Model”, IEEE Transactions on Image Processing, vol. 23, no. 8, pp. 3443-3458, 2014. [pdf][bib]

2. Jingyu Yang, Xinchen Ye, Kun Li, and Chunping Hou, “Depth recovery using an adaptive color-guided auto-regressive model”, European Conference on Computer Vision (ECCV), October 7-13, 2012, Firenze, Italy. [pdf] [bib]

Associate Professor

Supervisor of Master's Candidates

Main positions: IEEE member, ACM member

Other Post: None

Gender: Male

Alma Mater: Dalian University of Technology

Degree: Doctoral Degree

School/Department: School of Software Technology

Discipline: Software Engineering

Business Address: Teaching Building C507, Campus of Development Zone, Dalian, China.

Contact Information: yexch@dlut.edu.cn
