Personal homepage: http://faculty.dlut.edu.cn/yexinchen/zh_CN/index.htm

Learning Scene Structure Guidance via Cross-Task Knowledge Transfer for Single Depth Super-Resolution

Baoli Sun1, Xinchen Ye*1, Baopu Li2, Haojie Li1, Zhihui Wang1, Rui Xu1

1 Dalian University of Technology, 2 Baidu Research, USA

* Corresponding author

Code: https://github.com/Sunbaoli/dsr-distillation

Paper: Paper Download.pdf

Abstract

Existing color-guided depth super-resolution (DSR) approaches require paired RGB-D data as training samples, where the RGB image serves as structural guidance for recovering the degraded depth map because of the geometrical similarity between the two. However, paired data may be limited or expensive to collect in real testing environments. We therefore explore, for the first time, learning cross-modality knowledge at the training stage, where both RGB and depth modalities are available, while testing on the target dataset, where only the depth modality exists. Our key idea is to distill the knowledge of scene structural guidance from the RGB modality into the single DSR task without changing its network architecture. Specifically, we construct an auxiliary depth estimation (DE) task that takes an RGB image as input and estimates a depth map, and we train the DSR and DE tasks collaboratively to boost DSR performance. On this basis, a cross-task interaction module is proposed to realize bilateral cross-task knowledge transfer. First, we design a cross-task distillation scheme that encourages the DSR and DE networks to learn from each other in a teacher-student role-exchanging fashion. Then, we introduce a structure prediction (SP) task that provides extra structure regularization, helping both the DSR and DE networks learn more informative structure representations for depth recovery. Extensive experiments demonstrate that our scheme achieves superior performance compared with other DSR methods.
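The teacher-student role exchange mentioned in the abstract can be illustrated with a small NumPy sketch. This is not the released implementation: the function name `role_exchange_losses`, the L1 error used to pick the teacher, the output-level distillation term, and the `weight` parameter are all assumptions made for illustration. The idea shown is only that whichever network currently predicts depth more accurately acts as the teacher, and the other network receives an extra term pulling it toward the teacher.

```python
import numpy as np


def l1(a, b):
    """Mean absolute difference between two depth maps."""
    return float(np.mean(np.abs(a - b)))


def role_exchange_losses(dsr_pred, de_pred, gt_depth, weight=0.1):
    """Return (dsr_loss, de_loss, teacher) for one sample.

    Hypothetical rule: the network with the lower L1 error against the
    ground truth is the teacher for this step; only the student gets the
    distillation term. In a real training framework the teacher output
    would be treated as a constant (no gradient) in that term.
    """
    dsr_err = l1(dsr_pred, gt_depth)
    de_err = l1(de_pred, gt_depth)
    distill = l1(dsr_pred, de_pred)  # gap between the two predictions

    if dsr_err <= de_err:
        # DSR network teaches, DE network is the student.
        return dsr_err, de_err + weight * distill, "DSR"
    # DE network teaches, DSR network is the student.
    return dsr_err + weight * distill, de_err, "DE"
```

Because the comparison is made per step, the roles can swap during training, which is one way to read "role-exchanging" in the abstract.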

Method

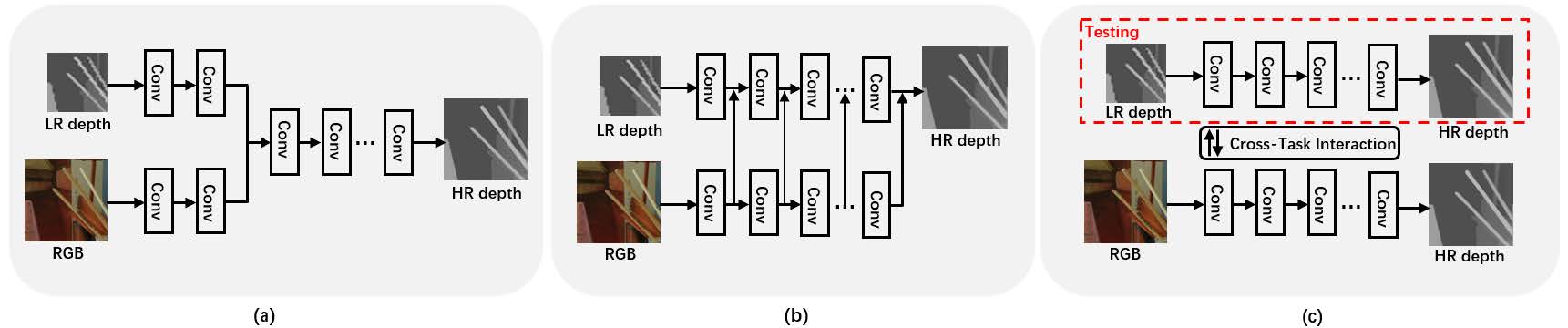

Figure 1. Color-guided DSR paradigms. (a) Joint filtering, (b) Multi-scale feature aggregation, (c) Our cross-task interaction mechanism to distill knowledge from RGB image to DSR task without changing its network architecture.

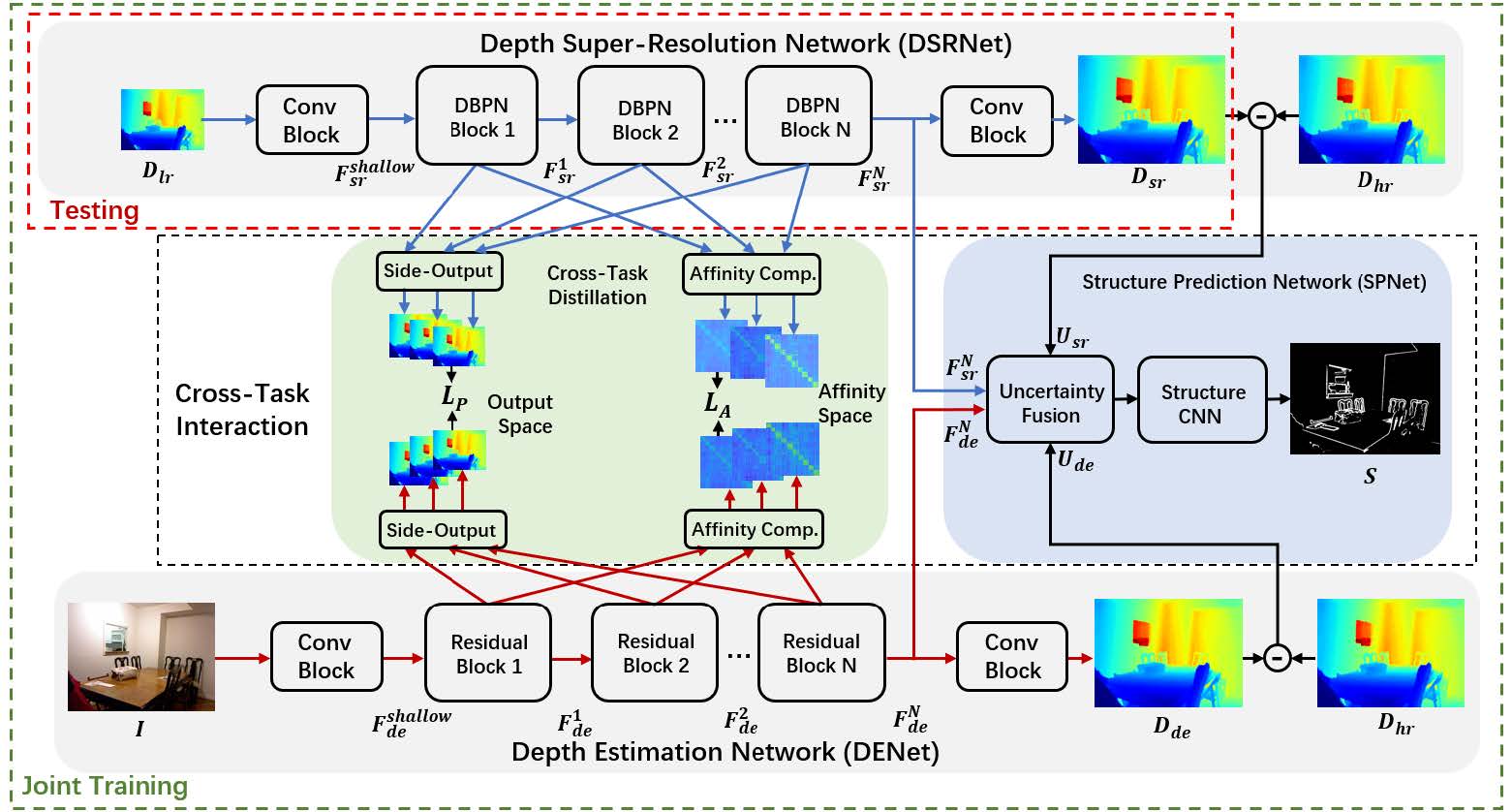

Figure 2. Illustration of our proposed framework, which consists of DSRNet, DENet, and the cross-task interaction module between them. In the testing phase, only DSRNet is used to predict the HR depth map from the LR depth map, without the help of a color image.

Result

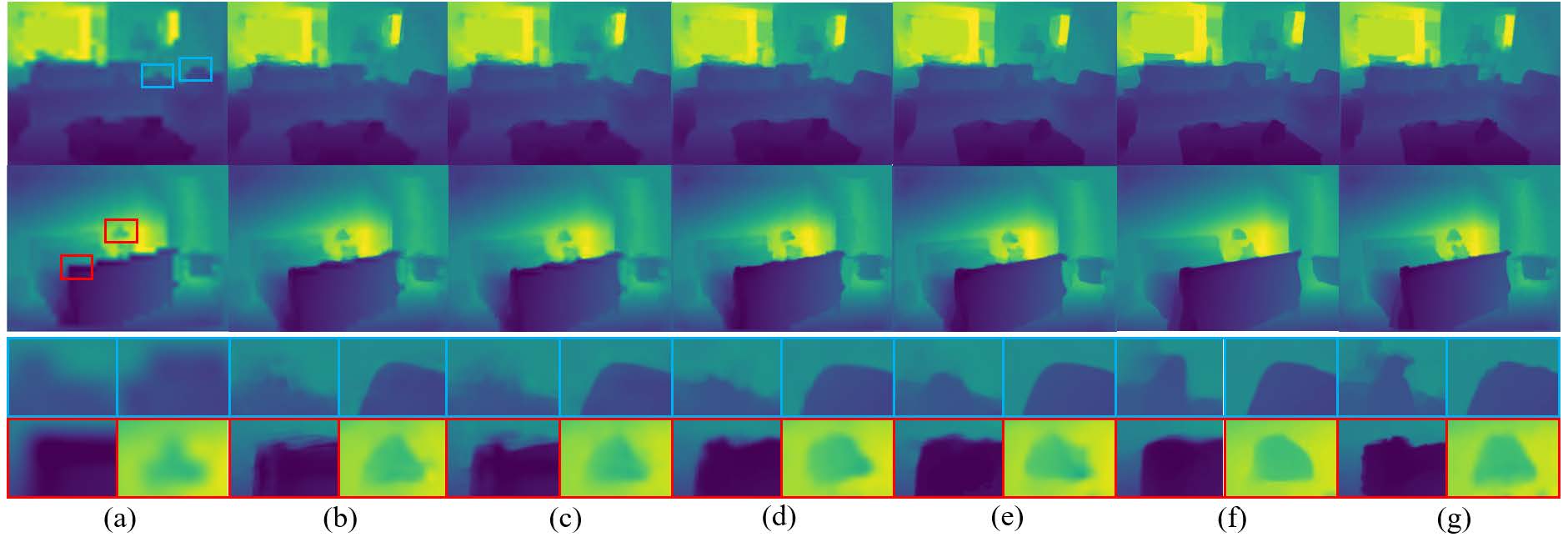

Figure 3. Visual comparison of x8 DSR results on Art and Dolls in Middlebury.

Figure 4. Visual comparison of x16 DSR results on NYU v2 dataset.

Citation

Baoli Sun, Xinchen Ye*, Baopu Li, Haojie Li, Zhihui Wang, Rui Xu, Learning Scene Structure Guidance via Cross-Task Knowledge Transfer for Single Depth Super-Resolution, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021

@inproceedings{Sun2021cvpr,
  author = {Baoli Sun and Xinchen Ye and Baopu Li and Haojie Li and Zhihui Wang and Rui Xu},
  title = {Learning Scene Structure Guidance via Cross-Task Knowledge Transfer for Single Depth Super-Resolution},
  booktitle = {Proc. Computer Vision and Pattern Recognition (CVPR)},
  year = {2021},
}